Introduction

This two-part blog post explores the Amazon Alexa APIs and their applicability for in-vehicle use.

Part I of the series provided an overview of Alexa and its current uses in an automotive context. Part II describes specific Alexa functions and how they can be used in automotive systems, and then provides a possible implementation architecture with a focus on using Alexa in the vehicle.

Architecture

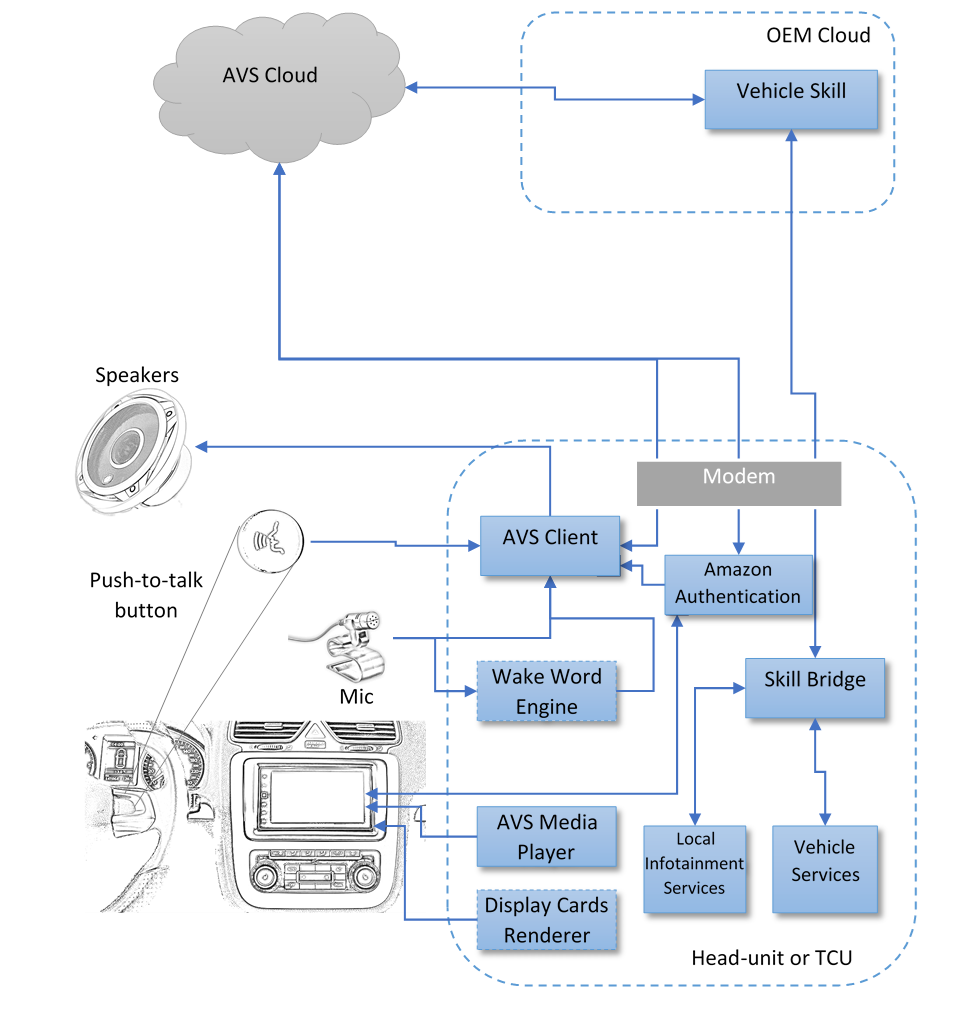

In the previous post we described how the vehicle can be controlled from home using telematics services. The figure above shows a possible architecture for integrating Alexa Voice Services into a car environment. The goal of this architecture is to allow drivers and passengers in the car to control and interact with the vehicle via Alexa.

The Alexa client application can run on either the in-vehicle head-unit or the telematics control unit (TCU). As Alexa is a pure cloud-based solution, the system requires internet connectivity to function. In this case the assumption is that the car has connectivity through a built-in modem. However, connectivity can also be provided via the smartphone using solutions such as Abalta’s SmartLink.

What follows is a description of the main components from the diagram and their roles in integrating Alexa Voice Services into a car environment (note that some components are optional).

AVS Client

The AVS Client is the main component that implements communication with the Amazon cloud using the Alexa Voice Services (AVS) APIs. It handles the audio path, taking input from one or more microphones (typically with noise cancellation ability) and playing the audio result returned by AVS via the car speakers. This component is responsible for mixing and/or prioritizing the audio with other local sources that the user might be listening to (radio, media player, etc.). The AVS client can be activated using a push-to-talk button (e.g. on the steering wheel) or by a voice command that is recognized by the Wake Word Engine.

To communicate with the Amazon cloud, the AVS Client needs authentication tokens that identify the user and the specific communication session. These tokens are provided by the Amazon Authentication module. For more information, see https://developer.amazon.com/alexa-voice-service, which offers documentation, sample code, and examples.

Wake Word Engine

This is an optional speech recognition component that is constantly “listening” for a wake word (e.g. “Alexa”). Once detected, it notifies the AVS Client to start sending voice data to the cloud for processing. The Wake Word Engine is typically implemented using an on-board speech recognition engine specifically tuned to detect a predefined keyword from the microphone input. It should be efficient and use little computing power with a high degree of accuracy for wake word detection.
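The hand-off between the two activation paths (wake word and push-to-talk) and the AVS Client can be sketched as a small state machine. This is only an illustration: `AvsClientStub` and its methods are hypothetical names, not part of the AVS APIs, and a real client would also stream audio and handle errors.

```python
from enum import Enum, auto


class ClientState(Enum):
    IDLE = auto()
    STREAMING = auto()  # microphone audio is being sent to the cloud


class AvsClientStub:
    """Hypothetical stand-in for the AVS Client (not a real AVS API)."""

    def __init__(self):
        self.state = ClientState.IDLE
        self.activations = []  # what triggered each voice session

    def _start(self, source):
        # Ignore new triggers while a request is already in progress.
        if self.state is ClientState.STREAMING:
            return False
        self.state = ClientState.STREAMING
        self.activations.append(source)
        return True

    def on_wake_word(self, keyword):
        """Called by the Wake Word Engine when it detects the keyword."""
        return self._start(f"wake-word:{keyword}")

    def on_push_to_talk(self):
        """Called when the steering-wheel button is pressed."""
        return self._start("push-to-talk")

    def on_response_finished(self):
        """Called once the spoken response has finished playing."""
        self.state = ClientState.IDLE


client = AvsClientStub()
client.on_wake_word("alexa")
client.on_push_to_talk()       # ignored: a session is already active
client.on_response_finished()
client.on_push_to_talk()       # starts a second session
print(client.activations)
```

Keeping both triggers behind one entry point means the audio path only has to deal with a single "start listening" event, regardless of how the session began.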

Amazon does not currently provide a wake word engine. The integrator needs to source a third-party engine from companies like Sensory, Nuance or Kitt.ai. Sensory and Kitt.ai offer free engines for evaluation purposes on GitHub: https://github.com/Sensory/alexa-rpi and https://github.com/Kitt-AI/snowboy.

Amazon Authentication

This module is responsible for user authentication with Amazon and the handling of communication tokens. While a headless device can be authenticated using a companion application, in the case of a head-unit the authentication can be done on the device itself by asking the user to log in with their Amazon account. Using that information, the module obtains the necessary authentication tokens and passes them to the AVS Client module.

Each new Alexa device type first needs to be registered on the Amazon Developer’s portal. Amazon generates client IDs and a secret key that is used by the client to identify itself.

There are plenty of examples and tutorials provided by Amazon that describe how to do the authentication:

https://developer.amazon.com/docs/alexa-voice-service/authorize-on-product.html
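One way the authentication module could hand tokens to the AVS Client is through a small cache that refreshes the token shortly before it expires. The sketch below makes no network calls: `fetch` is a hypothetical callable standing in for the real exchange with Amazon, and the class name, the 60-second margin, and the token lifetime are assumptions chosen for illustration.

```python
import time
from dataclasses import dataclass


@dataclass
class Token:
    access_token: str
    expires_at: float  # absolute time in seconds, on the manager's clock


class TokenManager:
    """Caches an access token and refreshes it shortly before expiry.

    `fetch` is a hypothetical callable that performs the real token
    exchange and returns (access_token, lifetime_seconds).
    """

    def __init__(self, fetch, clock=time.monotonic, margin=60.0):
        self._fetch = fetch
        self._clock = clock
        self._margin = margin
        self._token = None

    def get(self):
        now = self._clock()
        expired = (
            self._token is None
            or now >= self._token.expires_at - self._margin
        )
        if expired:
            access_token, lifetime = self._fetch()
            self._token = Token(access_token, now + lifetime)
        return self._token.access_token


# Demonstration with a fake fetcher and a controllable clock.
calls = []


def fake_fetch():
    calls.append(1)
    return f"token-{len(calls)}", 3600.0


now = [0.0]
manager = TokenManager(fake_fetch, clock=lambda: now[0])
first = manager.get()    # fetched
now[0] = 100.0
second = manager.get()   # served from the cache
now[0] = 3550.0
third = manager.get()    # inside the refresh margin, fetched again
```

Injecting the clock and the fetch function keeps the refresh logic testable without touching the network.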

AVS Media Player

This module is responsible for handling media playback data received via the AVS Client.

With AVS Device SDK v1.1, Amazon has expanded music service provider support to include TuneIn, Pandora, SiriusXM, and Amazon Music. The SDK also includes support for Audible, Kindle Books and agents to control playback, parse playlists, and manage alerts.

If the user requests to play music or listen to audiobooks through Alexa, the music data and the associated metadata will come through the AVS Client. This module is responsible for processing the data, handling the state, displaying the status to the user, and more. In addition to audio data, the client will receive metadata such as artist, song, album, etc. The AVS Media Player can render this information on the head-unit screen and integrate the media content like any other local or remote content. Any user controls from the touch screen or steering wheel are handled by this module and forwarded to the AVS Client.

Display Card Render

The Display Card Render module is responsible for rendering the display cards returned by the AVS Client as a response to requests. Display cards are metadata returned by AVS (in the form of a JSON data structure) that include information for weather forecasts, calendar updates, search results, shopping lists, etc. The media player display cards are handled by the AVS Media Player module. For Alexa to return display cards for the given device, the developer needs to enable the Display Cards capability in the Alexa Developer Console.

Amazon provides sample code and guidelines on how the visual data should be presented: https://developer.amazon.com/docs/alexa-voice-service/display-cards-overview.html
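As a rough illustration of what the render module does with such a card, the sketch below turns a JSON payload into lines of text for the head-unit screen. The payload shape (`type`, `title.mainTitle`, `title.subTitle`, `textField`) is an assumption made for this example and should be checked against Amazon's display card documentation; `render_card` is a hypothetical helper.

```python
import json
from typing import List

# Assumed example payload; field names are illustrative only.
SAMPLE_CARD = json.dumps({
    "type": "BodyTemplate1",
    "title": {"mainTitle": "Shopping list", "subTitle": "2 items"},
    "textField": "Milk\nCoffee",
})


def render_card(raw: str) -> List[str]:
    """Convert a display card JSON string into lines of screen text."""
    card = json.loads(raw)
    title = card.get("title", {})
    lines = []
    if title.get("mainTitle"):
        lines.append(title["mainTitle"].upper())
    if title.get("subTitle"):
        lines.append(title["subTitle"])
    body = card.get("textField")
    if body:
        lines.extend(body.splitlines())
    else:
        # Fall back gracefully for card types this sketch does not handle.
        card_type = card.get("type", "unknown")
        lines.append(f"[unsupported card type: {card_type}]")
    return lines


for line in render_card(SAMPLE_CARD):
    print(line)
```

A production renderer would map each card type to its own screen layout and follow Amazon's presentation guidelines rather than emitting plain text.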

Vehicle Skill

Vehicle Skill is a server-side module that allows the creation of an enhanced user experience specific to an individual vehicle. It enables the user to issue voice commands to query or even control the vehicle, above and beyond the standard services provided by Amazon. The user needs to enable the skill for the in-vehicle device and reference the skill using the registered skill name. For example, the skill can enable the user to calculate a route using the local navigation system to the nearest gas station, by saying “Alexa, ask <Vehicle Skill Name> to calculate a route to the nearest gas station”.

The skill is built using the Alexa Skills Kit (ASK). Via the Amazon-provided APIs, the skill can obtain certain information about the device, such as its identity, as shown here: https://github.com/alexa/alexa-skills-kit-sdk-for-nodejs#device-id-support. The skill can also obtain the device address as described here: https://github.com/alexa/skill-sample-node-device-address-api. The address is entered manually by the user via the Alexa companion app, and as such is not accurate for moving vehicles. A more accurate source of real-time positioning information from the vehicle is therefore necessary.

In the proposed architecture, this is achieved through the Skill Bridge component that runs on the in-vehicle side. The bridge communicates with the vehicle skill through a separate channel using a secure protocol. The bridge can provide up-to-date vehicle position information as well as vehicle status (e.g. climate control status, media player status, engine status, etc.). Using the vehicle status, the skill can offer information and intelligent responses to user commands.

When the user issues a command for the vehicle, the skill processes the command and then sends it to the bridge component. When it receives the response back from the bridge, it generates a text-to-speech response and possibly sends a visual display card to the user.
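The command flow through the skill can be sketched as follows. The response dictionary follows the general shape of an Alexa Skills Kit JSON response (`outputSpeech` plus an optional `Simple` card); everything else, including the intent name `RouteToGasStationIntent`, the `send_command` method, and the `FakeBridge` class, is hypothetical and only stands in for the real channel to the vehicle.

```python
def build_response(speech, card_title=None, card_text=None):
    """Build a skill response with speech and an optional simple card."""
    response = {
        "outputSpeech": {"type": "PlainText", "text": speech},
        "shouldEndSession": True,
    }
    if card_title and card_text:
        response["card"] = {
            "type": "Simple",
            "title": card_title,
            "content": card_text,
        }
    return {"version": "1.0", "response": response}


def handle_intent(intent_name, bridge):
    """Forward a recognized intent to the vehicle and phrase the reply.

    `bridge` is a hypothetical object exposing send_command(name) -> dict.
    """
    if intent_name == "RouteToGasStationIntent":
        result = bridge.send_command("route_to_nearest_gas_station")
        if result.get("ok"):
            eta = result["eta_minutes"]
            return build_response(
                f"Route calculated. You will arrive in {eta} minutes.",
                card_title="Navigation",
                card_text=f"ETA: {eta} min",
            )
        return build_response(
            "Sorry, the navigation system could not calculate a route."
        )
    return build_response("Sorry, I can't do that in this vehicle yet.")


class FakeBridge:
    """Test double for the channel to the in-vehicle Skill Bridge."""

    def send_command(self, name):
        return {"ok": True, "eta_minutes": 7}


reply = handle_intent("RouteToGasStationIntent", FakeBridge())
print(reply["response"]["outputSpeech"]["text"])
```

Because the bridge is passed in, the same handler can be exercised against a fake in tests and against the real secure channel in production.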

Skill Bridge

This is a component that runs on the in-vehicle side and communicates with the back-end, specifically the Vehicle Skill component. It provides vehicle status (upon request) and executes commands received from the skill.

The bridge can communicate with the back-end over an existing telematics infrastructure or a dedicated parallel channel.

On the in-vehicle side, the Skill Bridge communicates with the Local Infotainment Services component to control services on the head-unit such as navigation, media player, radio, and volume. It also communicates with the Vehicle Services component to receive vehicle status and control some of the vehicle functions such as climate control and lights.
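On the vehicle side, the bridge essentially routes incoming commands to whichever local component can execute them. A minimal sketch of that dispatch, assuming a simple dictionary message format (`command` plus `args`) and hypothetical handler names, might look like this:

```python
class SkillBridge:
    """Routes commands from the Vehicle Skill to local handlers.

    The message format and command names are assumptions made for
    this sketch, not a defined protocol.
    """

    def __init__(self):
        self._handlers = {}

    def register(self, command, handler):
        """Let a local service expose a command to the skill."""
        self._handlers[command] = handler

    def execute(self, message):
        handler = self._handlers.get(message.get("command"))
        if handler is None:
            return {"ok": False, "error": "unsupported_command"}
        try:
            result = handler(**message.get("args", {}))
        except Exception as exc:  # report failures instead of crashing
            return {"ok": False, "error": str(exc)}
        return {"ok": True, "result": result}


# Hypothetical local services registering their capabilities.
state = {"volume": 5, "cabin_temp_c": 21.0}


def set_volume(level):
    state["volume"] = max(0, min(10, level))  # clamp to a 0-10 scale
    return state["volume"]


def get_cabin_temperature():
    return state["cabin_temp_c"]


bridge = SkillBridge()
bridge.register("set_volume", set_volume)              # infotainment
bridge.register("get_cabin_temp", get_cabin_temperature)  # vehicle

print(bridge.execute({"command": "set_volume", "args": {"level": 12}}))
print(bridge.execute({"command": "get_cabin_temp"}))
print(bridge.execute({"command": "open_trunk"}))
```

A registry like this keeps the optional components truly optional: a command that no installed service has registered simply returns an error the skill can turn into a spoken apology.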

Local Infotainment Services

Local Infotainment Services is an optional component that interfaces with the Skill Bridge and the various infotainment services that can be controlled by Alexa. For example, it could integrate with the navigation system in the head-unit and expose an API that allows the bridge to request a route. It can return the status of the route and update the bridge with the estimated time of arrival.

This component can also integrate with the radio and media player functionality on the head-unit and allow it to be controlled via Alexa voice commands through the Vehicle Skill.

Vehicle Services

This optional component interfaces with the Skill Bridge and the various vehicle services that can be controlled by Alexa. For example, it could integrate with the climate control system (e.g. via CAN bus messages) and update the bridge with the inside / outside temperature and current climate control settings. It can expose an API to the bridge that allows it to set certain climate control functions from the Vehicle Skill as a result of a user’s voice commands.

This component could also collect vehicle diagnostics data, engine status, tire pressure status and related data and send it to the Vehicle Skill via the bridge component. The skill can use the information to send notifications to the user and even integrate with an OEM’s CRM system to suggest or schedule service appointments.

Device Location

As mentioned in the Vehicle Skill section, the Alexa API is currently limited regarding device location information, and there is no way for AVS clients to update the device location using an API. The only way this can currently be done is by the end-user via the Alexa companion app or website. The location also needs to be geocoded, i.e. it needs to be presented in the form of a street address. For in-vehicle use, where location often changes, the current API is not enough. This functionality shows Alexa’s origin as a home device, where constant movement is typically not an issue.

A workaround for this limitation can be found by using a custom skill and a parallel channel, as described in the proposed architecture using the Vehicle Skill and Skill Bridge components. This approach, however, will not work for standard Amazon services such as weather or place search. They will still use the location entered manually by the user, even if the vehicle is moving and the custom skill has the latest location.
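Inside the custom skill, the workaround amounts to preferring a recent position reported by the Skill Bridge and falling back to the manually entered address only when no fresh fix is available. The sketch below illustrates that choice; the `Position` type, the `resolve_location` function, and the 30-second freshness window are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Position:
    latitude: float
    longitude: float
    timestamp: float  # seconds, same clock as `now` below


def resolve_location(
    bridge_position: Optional[Position],
    device_address: Optional[str],
    now: float,
    max_age_s: float = 30.0,
) -> Tuple[str, object]:
    """Pick the best available location source for the custom skill."""
    fresh = (
        bridge_position is not None
        and now - bridge_position.timestamp <= max_age_s
    )
    if fresh:
        coords = (bridge_position.latitude, bridge_position.longitude)
        return ("coordinates", coords)
    if device_address:
        # Static address entered by the user in the companion app.
        return ("address", device_address)
    return ("unknown", None)


fix = Position(latitude=42.36, longitude=-71.06, timestamp=1000.0)
print(resolve_location(fix, "1 Main St", now=1010.0))
print(resolve_location(fix, "1 Main St", now=2000.0))
```

This only helps requests that are routed through the custom skill; the standard Amazon services never see the bridge position.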

This is a major limitation and we expect that Amazon will soon provide an API to allow such devices to update their location often and provide geo-coordinates (longitude / latitude) without requiring a geocoded address.

Conclusion

Although Alexa started as a home automation device with an open API and ecosystem, it can also be used successfully in an in-vehicle environment. As described in this two-part series, with custom skills and the open Alexa Voice Services APIs an in-vehicle system can be turned into an Alexa device that controls services inside the vehicle and delivers value for the driver and passengers. Due to its popularity and extensibility (not to mention the fact that it is currently free), we expect to see many Alexa implementations in the vehicle coming soon.